C and Rust

C and Rust

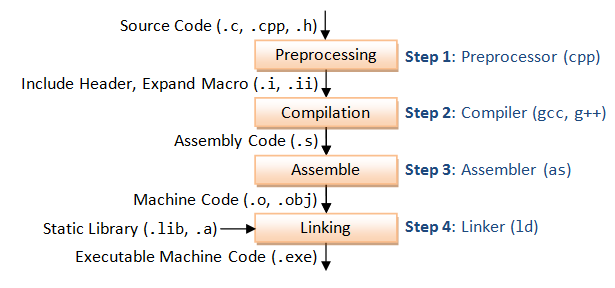

The process of compiling a C program

1. Preprocessing

Preprocessing is the first pass of any C compilation. It removes comments, expands include-files and macros, and processes conditional compilation instructions. This can be output as a .i file.

2. Compilation

Compilation is the second pass. It takes the output of the preprocessor, and the source code, and generates assembler source code (hello.s). Assembly language is a low-level programming language (even lower than C) that is still human-readable but consists of mnemonic instructions that have strong correspondence to machine instructions.

3. Assembly

Assembly is the third stage of compilation. It takes the assembly source code and produces an object file hello.o, which contains actual machine instructions and symbols (e.g., function names) that are no longer human-readable since they are in bits.

4. Linking

Linking is the final stage of compilation. It takes one or more object files or libraries as input and combines them to produce a single (usually executable) file. In doing so, it resolves references to external symbols, assigns final addresses to procedures/functions and variables, and revises code and data to reflect new addresses (a process called relocation).

The process of compiling a Rust program

1. Invocation

Compilation begins when a user writes a Rust source program in text and invokes the rustc compiler on it. The work that the compiler needs to perform is defined by command-line options. For example, it is possible to enable nightly features (-Z flags), perform check-only builds, or emit the LLVM Intermediate Representation (LLVM-IR) rather than executable machine code. The rustc executable call may be indirect through the use of cargo.

Command line argument parsing occurs in the rustc_driver. This crate defines the compile configuration that is requested by the user and passes it to the rest of the compilation process as a rustc_interface::Config.

2. Lexing and parsing

The raw Rust source text is analyzed by a low-level lexer located in rustc_lexer. At this stage, the source text is turned into a stream of atomic source code units known as tokens. The lexer supports the Unicode character encoding.

The token stream passes through a higher-level lexer located in rustc_parse to prepare for the next stage of the compile process. The Lexer struct is used at this stage to perform a set of validations and turn strings into interned symbols. String interning is a way of storing only one immutable copy of each distinct string value.

The lexer has a small interface and doesn't depend directly on the diagnostic infrastructure in rustc. Instead it provides diagnostics as plain data which are emitted in rustc_parse::lexer as real diagnostics. The lexer preserves full fidelity information for both IDEs and procedural macros (sometimes referred to as "proc-macros").

The parser translates the token stream from the lexer into an Abstract Syntax Tree (AST). It uses a recursive descent (top-down) approach to syntax analysis. The crate entry points for the parser are the Parser::parse_crate_mod() and Parser::parse_mod() methods found in rustc_parse::parser::Parser. The external module parsing entry point is rustc_expand::module::parse_external_mod. And the macro-parser entry point is Parser::parse_nonterminal().

Parsing is performed with a set of parser utility methods including bump, check, eat, expect, look_ahead.

Parsing is organized by semantic construct. Separate parse_* methods can be found in the rustc_parse directory. The source file name follows the construct name.

This naming scheme is used across many compiler stages. You will find either a file or directory with the same name across the parsing, lowering, type checking, Typed High-level Intermediate Representation (THIR) lowering, and Mid-level Intermediate Representation (MIR) building sources.

Macro-expansion, AST-validation, name-resolution, and early linting also take place during the lexing and parsing stage.

The rustc_ast::ast::{Crate, Expr, Pat, ...} AST nodes are returned from the parser while the standard Diag API is used for error handling. Generally Rust's compiler will try to recover from errors by parsing a superset of Rust's grammar, while also emitting an error type.

3. AST lowering

Next the AST is converted into High-Level Intermediate Representation (HIR), a more compiler-friendly representation of the AST. This process is called "lowering" and involves a lot of desugaring (the expansion and formalizing of shortened or abbreviated syntax constructs) of things like loops and async fn.

We then use the HIR to do type inference (the process of automatic detection of the type of an expression), trait solving (the process of pairing up an impl with each reference to a trait), and type checking. Type checking is the process of converting the types found in the HIR (hir::Ty), which represent what the user wrote, into the internal representation used by the compiler (Ty<'tcx>). It's called type checking because the information is used to verify the type safety, correctness and coherence of the types used in the program.

4. MIR lowering

The HIR is further lowered to MIR (used for borrow checking) by constructing the THIR (an even more desugared HIR used for pattern and exhaustiveness checking) to convert into MIR.

We do many optimizations on the MIR because it is generic and that improves later code generation and compilation speed. It is easier to do some optimizations at MIR level than at LLVM-IR level. For example LLVM doesn't seem to be able to optimize the pattern the simplify_try MIR-opt looks for.

Rust code is also monomorphized during code generation, which means making copies of all the generic code with the type parameters replaced by concrete types. To do this, we need to collect a list of what concrete types to generate code for. This is called monomorphization collection and it happens at the MIR level.

5. Code generation

We then begin what is simply called code generation or codegen. The code generation stage is when higher-level representations of source are turned into an executable binary. Since rustc uses LLVM for code generation, the first step is to convert the MIR to LLVM-IR. This is where the MIR is actually monomorphized. The LLVM-IR is passed to LLVM, which does a lot more optimizations on it, emitting machine code which is basically assembly code with additional low-level types and annotations added (e.g. an ELF object or WASM). The different libraries/binaries are then linked together to produce the final binary.

This post is licensed under CC BY 4.0 by the author.